Africa’s First Multilingual Small Language Model (SLM) Gets Even Smaller – Thanks to Top African Innovators

Africa’s first multilingual Small Language Model (SLM), InkubaLM, has been reduced in size by up to 75% without sacrificing performance. This milestone demonstrates how deliberate system design and optimisation can deliver scalable language AI under real-world constraints, particularly in environments where compute availability, connectivity, and device capability vary widely.

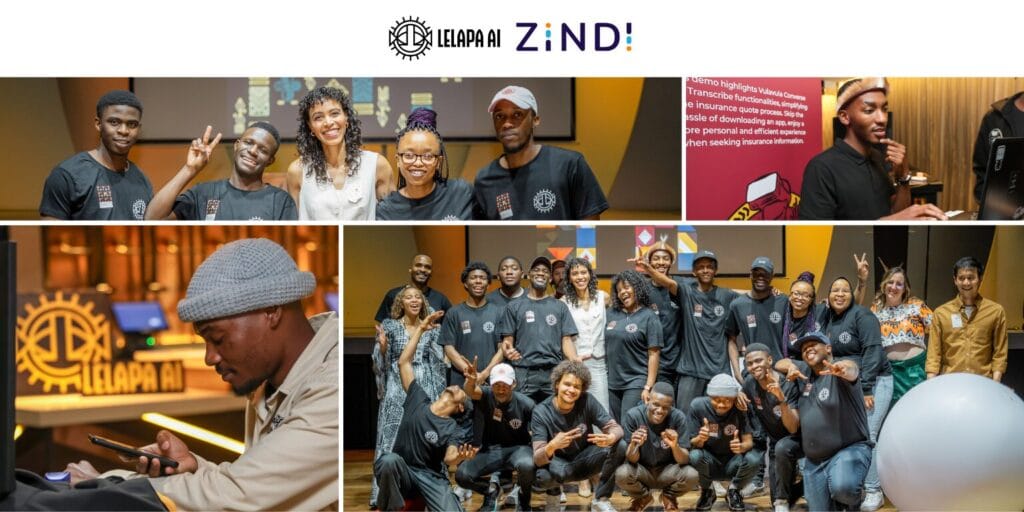

This remarkable achievement emerged from the Buzuzu-Mavi Challenge, a collaboration between Lelapa AI and Zindi that brought together over 490 data scientists, researchers, and machine learning engineers from 61 countries. The challenge focused on a clear technical goal: reduce InkubaLM’s size and computational footprint while preserving its translation and language understanding performance.

Why Size (Still) Matters

In many real-world environments, innovation must align with infrastructure realities. Across large parts of Africa and the Global South, access to high-end devices, stable connectivity, and sustained cloud compute remains uneven. In these contexts, language models designed for efficiency rather than scale-by-size are better suited for deployment and long-term use.

Resource-efficient models such as InkubaLM enable practical deployment across sectors by reducing reliance on constant connectivity and heavy cloud infrastructure. This unlocks real-world use cases such as:

- A farmer accessing climate updates in their home language

- Students using AI-powered educational tools on budget smartphones

- Call centres and clinics offering multilingual support without cloud dependency

Originally developed to support languages such as isiXhosa, Swahili, and Hausa, InkubaLM became significantly more compact through the optimisation techniques applied during the challenge. Participants reduced the model’s size by up to 75% while maintaining strong translation quality, reinforcing the role of architectural efficiency in scalable language AI.

The Winners

The winning solutions demonstrated how targeted optimisation techniques can dramatically reduce model size while preserving performance across multilingual tasks.

1st Place: Yvan Carré (Cameroon). He used adapter heads, quantisation, and knowledge distillation to create a compressed, adaptive, and efficient version of InkubaLM that performed well across tasks.

2nd Place: Stefan Strydom (South Africa). He aggressively shrank the model to just 40M parameters by trimming the vocabulary size, reducing layer depth, and using shared embeddings.

3rd Place: Team AI_Buzz – Abdourahamane Ide Salifou, Mubarak Muhammad, and Victor Olufemi (Nigeria and Niger). The team blended multiple datasets and distilled a performant student model with just 177M parameters.
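
To give a feel for why trimming the vocabulary, reducing layer depth, and sharing embeddings shrink a model so sharply, here is a minimal back-of-the-envelope sketch. Every number in it is hypothetical: `DecoderConfig`, `count_parameters`, and the two configurations are illustrative assumptions, not InkubaLM's real architecture and not any winner's actual code.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class DecoderConfig:
    """Hypothetical decoder-only transformer shape (not InkubaLM's real config)."""
    vocab_size: int
    d_model: int
    n_layers: int
    d_ff: int
    tie_embeddings: bool = False


def count_parameters(cfg: DecoderConfig) -> int:
    """Approximate weight count, ignoring biases and normalisation layers."""
    embedding = cfg.vocab_size * cfg.d_model
    # An untied output projection duplicates the embedding matrix;
    # shared (tied) embeddings reuse it, so the head adds nothing.
    output_head = 0 if cfg.tie_embeddings else embedding
    attention = 4 * cfg.d_model * cfg.d_model  # Q, K, V and output projections
    feed_forward = 2 * cfg.d_model * cfg.d_ff  # up- and down-projection
    return embedding + output_head + cfg.n_layers * (attention + feed_forward)


# Illustrative shapes only, chosen to make the arithmetic easy to follow.
baseline = DecoderConfig(vocab_size=60_000, d_model=1024, n_layers=8, d_ff=4096)
compact = replace(baseline, vocab_size=20_000, n_layers=4, tie_embeddings=True)

for name, cfg in [("baseline", baseline), ("compact", compact)]:
    print(f"{name}: {count_parameters(cfg) / 1e6:.1f}M parameters")

reduction = 1 - count_parameters(compact) / count_parameters(baseline)
print(f"reduction: {reduction:.0%}")
```

In small models the embedding tables account for a large share of the weights, which is why vocabulary trimming and shared embeddings can pay off as much as removing layers. Quantisation and knowledge distillation, also used by the winners, work differently and are not modelled here.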

The Buzuzu-Mavi Challenge reaffirmed what we’ve always believed at Lelapa AI: Africa has the talent. All five winners were African, a powerful testament to the depth of machine learning and data science expertise across the continent.

In Their Words

“Language is more than just communication, it’s a carrier of culture, identity, and knowledge. By supporting low-resource languages, we empower communities to participate fully in the digital world.” – Yvan Carré

“Building tools for people in their own languages is critical to making the technology accessible to more people.” – Stefan Strydom

Across the winning entries, participants consistently emphasised the importance of building language AI systems that perform reliably in real-world conditions. Their approaches reflected a shared focus on efficiency, adaptability, and practical deployment across diverse linguistic and technical environments.

A Step Forward for African AI

As Lelapa AI’s CEO and co-founder, Pelonomi Moiloa, notes: “Optimising language models under real-world constraints is a technical challenge with global relevance. By focusing on efficiency, adaptability, and deployment realities, we are building language systems that can scale beyond research environments and into everyday use.”

And from Zindi’s CEO and co-founder, Celina Lee: “It is a joy and a privilege for us at Zindi to partner with Lelapa AI on the Buzuzu-Mavi Challenge. Seeing the impact that our incredible community of AI builders can have on a truly African problem is inspiring and rewarding in its own right, but even better, these solutions showcase what African innovators can do in the language model space. In a world where the state of the art requires ever larger language models, we’re proud to show the world that more can be done with less.”

What’s Next?

Several of the top submissions will be integrated into future iterations of InkubaLM. While InkubaLM remains a base model rather than a production system, it serves as a foundational building block for efficient multilingual language applications. Its open-source availability enables further experimentation, optimisation, and fine-tuning for real-world deployment beyond Africa and the Global South.

→ Explore InkubaLM on Hugging Face

The challenge may be over. But the mission to make African AI smaller, smarter, and more inclusive has only just begun.

Note: The original development of InkubaLM was supported by compute provided through Microsoft’s AI for Good initiative.